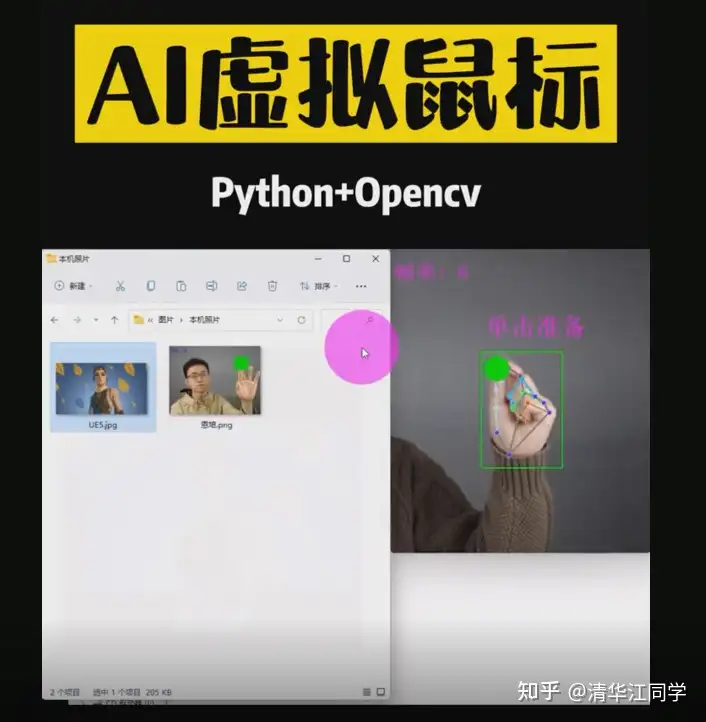

AI Virtual Mouse: A Detailed, Annotated Walkthrough of Enpei's Project 3

Thanks to Enpei for implementing the project in full and open-sourcing the code for everyone to study and discuss.

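I. Project Overview

The finished project controls the mouse with hand gestures.

The project is written in Python, uses libraries such as OpenCV and mediapipe, and consists of the following steps:

1. Read the camera video stream with OpenCV;

2. Detect the pixel coordinates of the hand's key points;

3. Work out the current gesture mode from those coordinates;

4. Perform the matching mouse operation: move, click, double-click, right-click, scroll up, scroll down, drag.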
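Step 4 rests on three small pieces of arithmetic that main.py performs: mapping the fingertip from the camera frame onto the screen, smoothing the pointer, and rate-limiting clicks. The sketch below reproduces them in plain Python so they can be tried without a camera. The function names are hypothetical, `map_to_screen` is only a stand-in for `np.interp`, and the 1920 px screen width is an assumed value; the 960 px frame, 100 px margin, smoothing factor 7 and 0.3 s click cooldown are the values used in main.py.

```python
def map_to_screen(value, src_min, src_max, dst_min, dst_max):
    """Linearly map value from [src_min, src_max] onto [dst_min, dst_max].

    Values outside the source range are clamped to its ends, which is
    also how np.interp treats out-of-range inputs.
    """
    clamped = min(max(value, src_min), src_max)
    ratio = (clamped - src_min) / (src_max - src_min)
    return dst_min + ratio * (dst_max - dst_min)


def smooth_step(previous, target, smoothening=7):
    """Move the pointer only 1/smoothening of the way toward target."""
    return previous + (target - previous) / smoothening


def cooldown_elapsed(now, last_fired, cooldown):
    """True once more than `cooldown` seconds have passed since last_fired."""
    return now - last_fired > cooldown


# Assumed numbers: 960 px wide frame, 100 px margin, 1920 px wide screen.
frame_w, margin, screen_w = 960, 100, 1920

# A fingertip in the middle of the control area maps to the middle of the screen.
x_target = map_to_screen(480, margin, frame_w - margin, 0, screen_w)

# On the first frame the pointer starts at 0 and covers 1/7 of the distance.
x_pointer = smooth_step(0.0, x_target)
print(x_target, round(x_pointer, 1))

# A second click 0.2 s after the first is suppressed; after 0.4 s it fires.
print(cooldown_elapsed(10.2, 10.0, 0.3), cooldown_elapsed(10.4, 10.0, 0.3))
```

A larger smoothing factor gives a steadier but slower pointer, because each frame closes a smaller share of the remaining distance.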

II. Code Walkthrough

main.py

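Questions are welcome by private message: thujiang000

    # Import OpenCV for image processing and display
    import cv2
    # Import the gesture-recognition module (handProcess, which wraps mediapipe)
    import handProcess
    # Other dependencies
    import time
    import numpy as np
    # Mouse-control packages
    import pyautogui
    from utils import Utils
    import autopy


    # Recognition and control class
    class VirtualMouse:
        def __init__(self):
            # Current frame, kept on the instance so another class can access it
            self.image = None

        # Main function
        def recognize(self):
            # Gesture-recognition class (video mode, at most one hand)
            handprocess = handProcess.HandProcess(False, 1)
            # Basic helpers: drawing images, drawing text, and so on
            utils = Utils()
            # Timestamp used for the frame-rate calculation
            fpsTime = time.time()
            # OpenCV capture object that reads images from the USB camera
            cap = cv2.VideoCapture(0)
            # Video resolution
            resize_w = 960
            resize_h = 720
            # Margin around the control area
            frameMargin = 100
            # Screen size, obtained from pyautogui
            screenWidth, screenHeight = pyautogui.size()
            # Smoothing parameters, so that the pointer moves smoothly
            stepX, stepY = 0, 0
            finalX, finalY = 0, 0
            smoothening = 7
            # Time at which each event last fired, initialized to 0
            action_trigger_time = {
                'single_click': 0,
                'double_click': 0,
                'right_click': 0
            }
            # Whether the mouse button is currently held down
            mouseDown = False

            # Loop for as long as the camera stays open
            while cap.isOpened():
                action_zh = ''
                # Grab one frame. cap.read() returns two values: whether the frame
                # was captured, and the image itself (None on failure)
                success, self.image = cap.read()
                # On an empty frame, skip to the next iteration and capture again.
                # The check comes before cv2.resize, which cannot handle None
                if not success:
                    print("空帧")  # "empty frame"
                    continue
                # Resize the frame
                self.image = cv2.resize(self.image, (resize_w, resize_h))
                # Mark the image read-only, which speeds up the conversions that follow
                self.image.flags.writeable = False
                # Convert the image from BGR storage order to RGB
                self.image = cv2.cvtColor(self.image, cv2.COLOR_BGR2RGB)
                # Mirror the image; adjust to suit the camera position
                self.image = cv2.flip(self.image, 1)
                # Feed the image to mediapipe's hand-landmark model and get the result
                self.image = handprocess.processOneHand(self.image)
                # Draw the frame that bounds the hand's control area
                cv2.rectangle(self.image, (frameMargin, frameMargin),
                              (resize_w - frameMargin, resize_h - frameMargin),
                              (255, 0, 255), 2)
                # Ask the gesture-recognition module for the current gesture
                self.image, action, key_point = handprocess.checkHandAction(self.image, drawKeyFinger=True)
                # Look up the display label of the recognized gesture
                action_zh = handprocess.action_labels[action]

                if key_point:
                    # np.interp is linear interpolation: it finds where key_point[0]
                    # falls within (frameMargin, resize_w - frameMargin) and returns
                    # the value at the same relative position within (0, screenWidth).
                    # The fingertip's position inside the frame is thereby mapped
                    # proportionally onto the screen
                    x3 = np.interp(key_point[0], (frameMargin, resize_w - frameMargin), (0, screenWidth))
                    y3 = np.interp(key_point[1], (frameMargin, resize_h - frameMargin), (0, screenHeight))
                    # Smoothing: limit the step size so the pointer moves smoothly
                    finalX = stepX + (x3 - stepX) / smoothening
                    finalY = stepY + (y3 - stepY) / smoothening
                    # Current time, used to rate-limit the click events below
                    now = time.time()

                    # Drag gesture ('鼠标拖拽')
                    if action_zh == '鼠标拖拽':
                        # If the button was not already down, press it
                        if not mouseDown:
                            pyautogui.mouseDown(button='left')
                            mouseDown = True
                        autopy.mouse.move(finalX, finalY)
                    else:
                        # If the button was down, release the left button
                        if mouseDown:
                            pyautogui.mouseUp(button='left')
                        mouseDown = False

                    # Perform the mouse action that matches the recognized gesture
                    if action_zh == '鼠标移动':      # move pointer
                        autopy.mouse.move(finalX, finalY)
                    elif action_zh == '单击准备':    # click ready
                        pass
                    elif action_zh == '触发单击' and (now - action_trigger_time['single_click'] > 0.3):  # click
                        pyautogui.click()
                        action_trigger_time['single_click'] = now
                    elif action_zh == '右击准备':    # right-click ready
                        pass
                    elif action_zh == '触发右击' and (now - action_trigger_time['right_click'] > 2):  # right-click
                        pyautogui.click(button='right')
                        action_trigger_time['right_click'] = now
                    elif action_zh == '向上滑页':    # scroll up
                        pyautogui.scroll(30)
                    elif action_zh == '向下滑页':    # scroll down
                        pyautogui.scroll(-30)

                    stepX, stepY = finalX, finalY

                self.image.flags.writeable = True
                self.image = cv2.cvtColor(self.image, cv2.COLOR_RGB2BGR)
                # Show the refresh rate (FPS); "帧率" means "frame rate"
                cTime = time.time()
                fps_text = 1 / (cTime - fpsTime)
                fpsTime = cTime
                self.image = utils.cv2AddChineseText(self.image, "帧率: " + str(int(fps_text)), (10, 30),
                                                     textColor=(255, 0, 255), textSize=50)
                # Show the frame
                self.image = cv2.resize(self.image, (resize_w // 2, resize_h // 2))
                cv2.imshow('virtual mouse', self.image)
                # Wait 5 ms and leave the loop when Esc is pressed
                if cv2.waitKey(5) & 0xFF == 27:
                    break
            cap.release()


    # Start the program
    control = VirtualMouse()
    control.recognize()

handprocess.py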

    # Import OpenCV
    import cv2
    # Import mediapipe
    import mediapipe as mp
    import time
    import math
    import numpy as np
    from utils import Utils

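    class HandProcess:

        def __init__(self, static_image_mode=False, max_num_hands=2):
            # Parameters
            self.mp_drawing = mp.solutions.drawing_utils  # mediapipe's drawing helpers
            self.mp_drawing_styles = mp.solutions.drawing_styles
            self.mp_hands = mp.solutions.hands  # mediapipe's hand-detection module
            # Create the mediapipe Hands object with the landmark-detection confidence,
            # the frame-to-frame tracking confidence, and the maximum number of hands;
            # it performs the landmark detection
            self.hands = self.mp_hands.Hands(static_image_mode=static_image_mode,
                                             min_detection_confidence=0.7,
                                             min_tracking_confidence=0.5,
                                             max_num_hands=max_num_hands)
            # List that stores the detected landmarks
            self.landmark_list = []
            # Label of the mouse operation that each gesture action maps to
            self.action_labels = {
                'none': '无',                        # none
                'move': '鼠标移动',                  # move pointer
                'click_single_active': '触发单击',   # click
                'click_single_ready': '单击准备',    # click ready
                'click_right_active': '触发右击',    # right-click
                'click_right_ready': '右击准备',     # right-click ready
                'scroll_up': '向上滑页',             # scroll up
                'scroll_down': '向下滑页',           # scroll down
                'drag': '鼠标拖拽'                   # drag
            }
            self.action_deteted = ''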

        # Get the left/right label of each detected hand, in array order
        def checkHandsIndex(self, handedness):
            # Check how many hands were detected
            if len(handedness) == 1:
                handedness_list = [handedness[0].classification[0].label]
            else:
                handedness_list = [handedness[0].classification[0].label,
                                   handedness[1].classification[0].label]
            return handedness_list

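        # Distance between two points
        def getDistance(self, pointA, pointB):
            # math.hypot applies the Pythagorean theorem to give the length between
            # two points, for example the index fingertip and the thumb tip
            return math.hypot((pointA[0] - pointB[0]), (pointA[1] - pointB[1]))

        # Pixel coordinates of a landmark in the image
        def getFingerXY(self, index):
            return (self.landmark_list[index][1], self.landmark_list[index][2])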

        # Draw the gesture-recognition result onto the image
        def drawInfo(self, img, action):
            thumbXY, indexXY, middleXY = map(self.getFingerXY, [4, 8, 12])
            if action == 'move':
                img = cv2.circle(img, indexXY, 20, (255, 0, 255), -1)
            elif action == 'click_single_active':
                middle_point = int((indexXY[0] + thumbXY[0]) / 2), int((indexXY[1] + thumbXY[1]) / 2)
                img = cv2.circle(img, middle_point, 30, (0, 255, 0), -1)
            elif action == 'click_single_ready':
                img = cv2.circle(img, indexXY, 20, (255, 0, 255), -1)
                img = cv2.circle(img, thumbXY, 20, (255, 0, 255), -1)
                img = cv2.line(img, indexXY, thumbXY, (255, 0, 255), 2)
            elif action == 'click_right_active':
                middle_point = int((indexXY[0] + middleXY[0]) / 2), int((indexXY[1] + middleXY[1]) / 2)
                img = cv2.circle(img, middle_point, 30, (0, 255, 0), -1)
            elif action == 'click_right_ready':
                img = cv2.circle(img, indexXY, 20, (255, 0, 255), -1)
                img = cv2.circle(img, middleXY, 20, (255, 0, 255), -1)
                img = cv2.line(img, indexXY, middleXY, (255, 0, 255), 2)
            return img

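        # Determine which action the hand is making
        def checkHandAction(self, img, drawKeyFinger=True):
            upList = self.checkFingersUp()
            action = 'none'
            if len(upList) == 0:
                return img, action, None
            # Detection distance, in pixels
            dete_dist = 100
            # Index fingertip
            key_point = self.getFingerXY(8)
            # Move: only the index finger is up. The pointer follows the index
            # fingertip and needs smoothing to suppress jitter
            if (upList == [0, 1, 0, 0, 0]):
                action = 'move'
            # Click: with the thumb and index finger up the pointer stops moving;
            # pinching the two together triggers a click
            if (upList == [1, 1, 0, 0, 0]):
                l1 = self.getDistance(self.getFingerXY(4), self.getFingerXY(8))
                action = 'click_single_active' if l1 < dete_dist else 'click_single_ready'
            # Right-click: with the index and middle fingers up the pointer stops
            # moving; bringing the two together triggers a right-click
            if (upList == [0, 1, 1, 0, 0]):
                l1 = self.getDistance(self.getFingerXY(8), self.getFingerXY(12))
                action = 'click_right_active' if l1 < dete_dist else 'click_right_ready'
            # Scroll up: all five fingers up
            if (upList == [1, 1, 1, 1, 1]):
                action = 'scroll_up'
            # Scroll down: the four fingers other than the thumb up
            if (upList == [0, 1, 1, 1, 1]):
                action = 'scroll_down'
            # Drag: the three fingers other than the thumb and index finger up
            if (upList == [0, 0, 1, 1, 1]):
                # Switch the key point to the middle fingertip
                key_point = self.getFingerXY(12)
                action = 'drag'
            # Draw the points relevant to the action
            img = self.drawInfo(img, action) if drawKeyFinger else img
            self.action_deteted = self.action_labels[action]
            return img, action, key_point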
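The decision logic in checkHandAction comes down to a lookup on the fingers-up pattern, plus a distance test for the two click gestures. Here is a standalone sketch of that idea, assuming fingertip coordinates are passed in directly rather than read from `self.landmark_list`. `classify`, `SIMPLE_GESTURES` and `DETECT_DIST` are hypothetical names used only for illustration; the patterns and the 100 px threshold are the ones in the code above.

```python
import math

DETECT_DIST = 100  # pixel threshold, same value as dete_dist

# Fingers-up pattern -> action key; order is [thumb, index, middle, ring, pinky].
SIMPLE_GESTURES = {
    (0, 1, 0, 0, 0): "move",
    (1, 1, 1, 1, 1): "scroll_up",
    (0, 1, 1, 1, 1): "scroll_down",
    (0, 0, 1, 1, 1): "drag",
}


def distance(point_a, point_b):
    """Euclidean distance between two (x, y) points."""
    return math.hypot(point_a[0] - point_b[0], point_a[1] - point_b[1])


def classify(up_list, thumb_xy, index_xy, middle_xy):
    """Return the action key for a fingers-up list and three fingertip positions."""
    pattern = tuple(up_list)
    if pattern == (1, 1, 0, 0, 0):
        # Thumb and index up: "ready" until the two tips are pinched together.
        pinched = distance(thumb_xy, index_xy) < DETECT_DIST
        return "click_single_active" if pinched else "click_single_ready"
    if pattern == (0, 1, 1, 0, 0):
        # Index and middle up: "ready" until the two tips are brought together.
        pinched = distance(index_xy, middle_xy) < DETECT_DIST
        return "click_right_active" if pinched else "click_right_ready"
    # Every other pattern is either a simple gesture or nothing at all.
    return SIMPLE_GESTURES.get(pattern, "none")


# Made-up coordinates: thumb and index tips 50 px apart, so the click fires.
print(classify([1, 1, 0, 0, 0], (100, 100), (130, 140), (0, 0)))
```

Because the patterns are mutually exclusive, the early returns here behave the same as the chain of independent `if` statements in checkHandAction.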

        # Return the list of fingers that are pointing up
        def checkFingersUp(self):
            fingerTipIndexs = [4, 8, 12, 16, 20]
            upList = []
            if len(self.landmark_list) == 0:
                return upList
            # Thumb: compare x coordinates of the tip and the joint below it
            if self.landmark_list[fingerTipIndexs[0]][1] < self.landmark_list[fingerTipIndexs[0] - 1][1]:
                upList.append(1)
            else:
                upList.append(0)
            # Other fingers: compare y coordinates of the tip and the joint two below it
            for i in range(1, 5):
                if self.landmark_list[fingerTipIndexs[i]][2] < self.landmark_list[fingerTipIndexs[i] - 2][2]:
                    upList.append(1)
                else:
                    upList.append(0)
            return upList
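The fingers-up test can be checked on synthetic data, without a camera or mediapipe. The sketch below is a standalone version of the same comparison; `fingers_up` and `FINGER_TIPS` are hypothetical names and the coordinates are made up. Each row has the `[id, x, y, z]` layout that processOneHand stores in `landmark_list`. Image y grows downward, so a raised fingertip has a smaller y than the joint two landmarks below it.

```python
FINGER_TIPS = [4, 8, 12, 16, 20]  # tip landmark indices, thumb to pinky


def fingers_up(landmarks):
    """Return a 0/1 flag per finger, or [] when no hand was detected.

    landmarks is a list of [id, x, y, z] rows indexed by landmark id.
    """
    if not landmarks:
        return []
    # Thumb: compare the x coordinate of the tip with the joint just below it.
    thumb_tip = FINGER_TIPS[0]
    up = [1 if landmarks[thumb_tip][1] < landmarks[thumb_tip - 1][1] else 0]
    # Other fingers: compare the y coordinate of the tip with the joint two below.
    for tip in FINGER_TIPS[1:]:
        up.append(1 if landmarks[tip][2] < landmarks[tip - 2][2] else 0)
    return up


# Synthetic hand with made-up coordinates: all 21 landmarks at (500, 500),
# then the index fingertip (landmark 8) is raised above its joint (landmark 6).
hand = [[i, 500, 500, 0.0] for i in range(21)]
hand[8][2] = 300

print(fingers_up(hand))
```

Note that the thumb test compares x coordinates, so whether it reads as "up" depends on which hand is used and on whether the image has been mirrored.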

        # Run hand detection on one frame and record the landmark positions
        # that the gesture analysis relies on
        def processOneHand(self, img, drawBox=True, drawLandmarks=True):
            utils = Utils()
            results = self.hands.process(img)
            self.landmark_list = []
            if results.multi_hand_landmarks:
                for hand_index, hand_landmarks in enumerate(results.multi_hand_landmarks):
                    if drawLandmarks:
                        self.mp_drawing.draw_landmarks(
                            img,
                            hand_landmarks,
                            self.mp_hands.HAND_CONNECTIONS,
                            self.mp_drawing_styles.get_default_hand_landmarks_style(),
                            self.mp_drawing_styles.get_default_hand_connections_style())
                    # Iterate over the landmarks, converting the normalized
                    # coordinates to pixel coordinates
                    for landmark_id, finger_axis in enumerate(hand_landmarks.landmark):
                        h, w, c = img.shape
                        p_x, p_y = math.ceil(finger_axis.x * w), math.ceil(finger_axis.y * h)
                        self.landmark_list.append([
                            landmark_id, p_x, p_y,
                            finger_axis.z
                        ])
                    # Bounding box and label
                    if drawBox:
                        x_min, x_max = min(self.landmark_list, key=lambda i: i[1])[1], max(self.landmark_list, key=lambda i: i[1])[1]
                        y_min, y_max = min(self.landmark_list, key=lambda i: i[2])[2], max(self.landmark_list, key=lambda i: i[2])[2]
                        img = cv2.rectangle(img, (x_min - 30, y_min - 30), (x_max + 30, y_max + 30), (0, 255, 0), 2)
                        img = utils.cv2AddChineseText(img, self.action_deteted, (x_min - 20, y_min - 120),
                                                      textColor=(255, 0, 255), textSize=60)
            return img

utils.py

    # Import PIL for image processing
    from PIL import Image, ImageDraw, ImageFont
    # Import OpenCV for image processing
    import cv2
    import numpy as np


    class Utils:
        def __init__(self):
            pass

        # Draw Chinese text onto an image
        def cv2AddChineseText(self, img, text, position, textColor=(0, 255, 0), textSize=30):
            if (isinstance(img, np.ndarray)):  # True when img is an OpenCV image
                img = Image.fromarray(cv2.cvtColor(img, cv2.COLOR_BGR2RGB))
            # Create an object that can draw on the given image
            draw = ImageDraw.Draw(img)
            # Font settings
            fontStyle = ImageFont.truetype(
                "./fonts/simsun.ttc", textSize, encoding="utf-8")
            # Draw the text
            draw.text(position, text, textColor, font=fontStyle)
            # Convert back to OpenCV format
            return cv2.cvtColor(np.asarray(img), cv2.COLOR_RGB2BGR)
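cv2AddChineseText converts the image before handing it to PIL and converts it back afterwards because OpenCV stores pixels in BGR order, while PIL expects RGB. For a three-channel image, that conversion simply reverses the channel order of every pixel. Below is a minimal pure-Python sketch of the round trip, with nested lists standing in for the image array; `swap_channels` is a hypothetical helper for illustration, not part of OpenCV or of this project.

```python
def swap_channels(pixels):
    """Reverse the channel order of every pixel (BGR -> RGB or RGB -> BGR).

    pixels is a list of rows, each row a list of (c0, c1, c2) tuples.
    """
    return [[tuple(reversed(px)) for px in row] for row in pixels]


# A 1x2 "image" in BGR order: one pure-blue pixel and one pure-red pixel.
bgr_image = [[(255, 0, 0), (0, 0, 255)]]

rgb_image = swap_channels(bgr_image)    # what PIL would be given
restored = swap_channels(rgb_image)     # what is handed back to OpenCV

print(rgb_image)
print(restored == bgr_image)
```

Applying the swap twice returns the original data, which is why the function can convert on the way in and on the way out without altering the colors of the frame.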